Hi!

Server: AMD Rome 64C/128T, 2x NVMe SSDs -> Linux software RAID -> LVM (some LVs use DRBD)

dom0: Ubuntu 22.04, 16 vCPUs, dom0_vcpus_pin

domU 1: PV, Ubuntu 22.04, 80 vCPUs, no pinning, load 30, PostgreSQL DB server

domU 2: PV, Ubuntu 22.04, 16 vCPUs, no pinning, load 1-2, web server

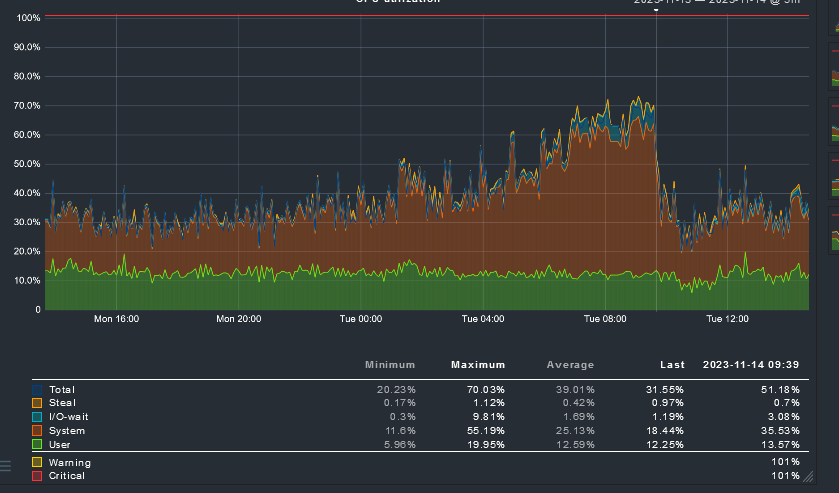

For whatever reason, the DB server became slow today. We saw:

- increased load
- increased CPU usage (only "system" time increased)
- reduced disk IOPS
- increased disk IO latency
- no increase in userspace workload

We still do not know whether the reduced IO performance was the cause of the issue or a consequence of it. We reduced the load on the DB, disconnected and reconnected DRBD, and ran fstrim in the domU. After some time, things were fine again.

To better understand what happened, maybe someone can answer my questions:

a) I ran `perf top` in the domU, and it reports something like:

```
76.23%  [kernel]  [k] xen_hypercall_sched_op
 4.14%  [kernel]  [k] xen_hypercall_xen_version
 0.97%  [kernel]  [k] pvclock_clocksource_read
 0.84%  perf      [.] queue_event
 0.81%  [kernel]  [k] pte_mfn_to_pfn.part.0
 0.57%  postgres  [.] hash_search_with_hash_value
```

So most of the CPU time is consumed by `xen_hypercall_sched_op`. Is it normal that `xen_hypercall_sched_op` basically eats up all the CPU? Or is this an indication of some underlying problem?
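To put a rough number on that, here is a minimal Python sketch that parses `perf top` lines and adds up the share of the `xen_hypercall_*` symbols. It assumes the simplified line layout pasted above: a percentage, the object, `[k]` or `[.]`, and then the symbol. Lines that do not match, such as perf's header lines, are skipped. The helper names are hypothetical, and the sketch only sums the reported percentages. It does not interpret what the hypercalls are doing.

```python
"""Sum the share of Xen hypercall symbols in `perf top` output.

Assumes lines shaped like the excerpt in this post, e.g.
    76.23%  [kernel]  [k] xen_hypercall_sched_op
"""
import re

# percentage, shared object, [k]/[.] marker, symbol
PERF_LINE = re.compile(r"^\s*([\d.]+)%\s+(\S+)\s+\[([.k])\]\s+(\S+)")


def parse_perf_top(text):
    """Return (percent, object, symbol) tuples for every recognised line."""
    rows = []
    for line in text.splitlines():
        m = PERF_LINE.match(line)
        if m:
            pct, dso, _kind, sym = m.groups()
            rows.append((float(pct), dso, sym))
    return rows


def hypercall_share(rows, prefix="xen_hypercall_"):
    """Total percentage attributed to symbols starting with `prefix`."""
    return round(sum(pct for pct, _dso, sym in rows if sym.startswith(prefix)), 2)


SAMPLE = """\
76.23%  [kernel]  [k] xen_hypercall_sched_op
 4.14%  [kernel]  [k] xen_hypercall_xen_version
 0.97%  [kernel]  [k] pvclock_clocksource_read
 0.84%  perf      [.] queue_event
 0.81%  [kernel]  [k] pte_mfn_to_pfn.part.0
 0.57%  postgres  [.] hash_search_with_hash_value
"""

if __name__ == "__main__":
    rows = parse_perf_top(SAMPLE)
    # prints: 80.37% of samples in xen_hypercall_* symbols
    print(f"{hypercall_share(rows):.2f}% of samples in xen_hypercall_* symbols")
```

With the excerpt above, the two hypercall symbols together account for 76.23% + 4.14% = 80.37% of the samples.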

b) I know that we only have CPU pinning for dom0, not for the domUs (reason: probably some legacy setup that was never implemented correctly).

```
# xl vcpu-list
Name      ID  VCPU  CPU  State  Time(s)   Affinity (Hard / Soft)
Domain-0   0     0    0  -b-    66581.0   0 / all
Domain-0   0     1    1  -b-    60248.8   1 / all
…
Domain-0   0    14   14  -b-    65531.2   14 / all
Domain-0   0    15   15  -b-    68970.9   15 / all
domU1      3     0   74  -b-    113149.8  all / 0-127
…
```

b1) Since the domUs are not pinned, it may happen that the same physical CPU is used by both dom0 and a domU. But why? There are 128 physical CPUs (hardware threads) available and only 112 vCPUs in total (16 + 80 + 16). Is Xen not smart enough to spread the vCPUs across all physical CPUs?
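To check the arithmetic, and to see whether a domU vCPU is currently sitting on one of dom0's pinned pCPUs, a minimal Python sketch could parse the `xl vcpu-list` rows. It assumes the figures from this post: 128 pCPUs, 16 + 80 + 16 vCPUs, and dom0 pinned 1:1 to pCPUs 0-15. It also assumes the column layout shown above. The constant and function names are hypothetical. Because `xl vcpu-list` prints a snapshot of the current placement, it would have to be sampled repeatedly to catch overlaps that come and go.

```python
"""Cross-check the vCPU budget and look for domU vCPUs on dom0's pCPUs.

Assumptions (taken from this post): 128 pCPUs, vCPU counts per domain as
listed at the top, dom0 pinned 1:1 to pCPUs 0-15 via dom0_vcpus_pin, and
`xl vcpu-list` rows laid out as: Name ID VCPU CPU State Time(s) Affinity.
"""

PCPUS = 128
VCPUS = {"Domain-0": 16, "domU1": 80, "domU2": 16}
DOM0_PCPUS = set(range(16))  # dom0 vCPU n is pinned to pCPU n


def parse_vcpu_list(text):
    """Yield (name, vcpu, cpu, state); skips the header, '…' and non-numeric rows."""
    for line in text.splitlines():
        f = line.split()
        if len(f) >= 5 and f[1].isdigit() and f[2].isdigit() and f[3].isdigit():
            yield f[0], int(f[2]), int(f[3]), f[4]


def domu_on_dom0_pcpus(text):
    """Return the domU vCPUs whose current pCPU is one of dom0's pinned pCPUs."""
    return [
        row for row in parse_vcpu_list(text)
        if row[0] != "Domain-0" and row[2] in DOM0_PCPUS
    ]


SAMPLE = """\
Name      ID  VCPU  CPU  State  Time(s)   Affinity (Hard / Soft)
Domain-0   0     0    0  -b-    66581.0   0 / all
Domain-0   0    15   15  -b-    68970.9   15 / all
domU1      3     0   74  -b-    113149.8  all / 0-127
"""

if __name__ == "__main__":
    total = sum(VCPUS.values())
    print(f"{total} vCPUs on {PCPUS} pCPUs")  # 112 vCPUs on 128 pCPUs
    print(domu_on_dom0_pcpus(SAMPLE))  # [] for this excerpt
```

On the excerpt it returns an empty list, because the only domU vCPU shown (vCPU 0 on pCPU 74) is outside 0-15. The rows elided with `…` would need to be fed in to get a real answer.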

b2) Sometimes I see two vCPUs on the same physical CPU. How can one physical CPU be used concurrently by two vCPUs? And why does this happen when there are plenty of physical CPUs left?

```
root@cc6-vie:/home/darilion# xl vcpu-list|grep 102
Name   ID  VCPU  CPU  State  Time(s)   Affinity (Hard / Soft)
domU1   3    67  102  r--    119730.3  all / 0-127
domU1   3    77  102  -b-    119224.1  all / 0-127
```
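To spot such cases across the whole list, rather than grepping for one CPU number at a time, a minimal Python sketch could group the `xl vcpu-list` rows by their CPU column. It assumes the column layout shown above. It keeps the State column next to each vCPU, because that is where `xl` marks a vCPU as running (`r`) or blocked (`b`). The function name is hypothetical.

```python
"""Group `xl vcpu-list` rows by pCPU and report pCPUs listed for >1 vCPU.

Assumes the column layout shown in this post: Name ID VCPU CPU State ...
"""
from collections import defaultdict


def shared_pcpus(text):
    """Map pCPU -> [(name, vcpu, state), ...] for pCPUs that appear more than once."""
    by_cpu = defaultdict(list)
    for line in text.splitlines():
        f = line.split()
        # Skip the header and any row whose ID/VCPU/CPU columns are not numeric.
        if len(f) >= 5 and f[1].isdigit() and f[2].isdigit() and f[3].isdigit():
            by_cpu[int(f[3])].append((f[0], int(f[2]), f[4]))
    return {cpu: rows for cpu, rows in by_cpu.items() if len(rows) > 1}


SAMPLE = """\
Name   ID  VCPU  CPU  State  Time(s)   Affinity (Hard / Soft)
domU1   3    67  102  r--    119730.3  all / 0-127
domU1   3    77  102  -b-    119224.1  all / 0-127
"""

if __name__ == "__main__":
    for cpu, rows in sorted(shared_pcpus(SAMPLE).items()):
        for name, vcpu, state in rows:
            print(f"pCPU {cpu}: {name} vCPU {vcpu} state {state}")
```

For the excerpt above it prints pCPU 102 twice: once for vCPU 67 in state `r--` and once for vCPU 77 in state `-b-`.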

Thanks

Klaus

```
root@cc6-vie:/home/darilion# xl info
host                 : cc6-vie
release              : 5.10.0-26-amd64
version              : #1 SMP Debian 5.10.197-1 (2023-09-29)
machine              : x86_64
nr_cpus              : 128
max_cpu_id           : 255
nr_nodes             : 1
cores_per_socket     : 64
threads_per_core     : 2
cpu_mhz              : 2000.008
hw_caps              : 178bf3ff:76d8320b:2e500800:244037ff:0000000f:219c91a9:00400004:00000780
virt_caps            : pv hvm hvm_directio pv_directio hap shadow gnttab-v1 gnttab-v2
total_memory         : 262006
free_memory          : 87382
sharing_freed_memory : 0
sharing_used_memory  : 0
outstanding_claims   : 0
free_cpus            : 0
xen_major            : 4
xen_minor            : 17
xen_extra            : .1-pre
xen_version          : 4.17.1-pre
xen_caps             : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
xen_scheduler        : credit2
xen_pagesize         : 4096
platform_params      : virt_start=0xffff800000000000
xen_changeset        :
xen_commandline      : placeholder dom0_mem=8192M,max:8192M dom0_max_vcpus=16 dom0_vcpus_pin gnttab_max_frames=256 no-real-mode edd=off
cc_compiler          : x86_64-linux-gnu-gcc (Debian 10.2.1-6) 10.2.1 20210110
cc_compile_by        : pkg-xen-devel
cc_compile_domain    : lists.alioth.debian.org
cc_compile_date      : Mon Feb 13 10:13:39 UTC 2023
build_id             : d62435c0245f36ee9a3436272135b4c5706b2bfc
xend_config_format   : 4
```
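To tie the `xl info` figures back to the hardware description at the top, a minimal Python sketch could parse the `key : value` lines and pick apart `xen_commandline`. It assumes a single socket, since the post describes one 64-core AMD Rome CPU. It splits each line at its first colon so that values such as `dom0_mem=8192M,max:8192M` stay intact. The helper names and the `SOCKETS` constant are hypothetical.

```python
"""Read `xl info` output and cross-check the CPU topology and dom0 options.

Assumptions: one socket (from the hardware description in this post);
every line is `key : value` and is split at its first colon only.
"""

SOCKETS = 1  # assumption, not reported by `xl info` in this output


def parse_xl_info(text):
    """Return {key: value} for every `key : value` line."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def xen_cmdline_options(cmdline):
    """Split the hypervisor command line into {option: value, or None for flags}."""
    opts = {}
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        opts[name] = value if sep else None
    return opts


SAMPLE = """\
nr_cpus              : 128
cores_per_socket     : 64
threads_per_core     : 2
free_cpus            : 0
xen_scheduler        : credit2
xen_commandline      : placeholder dom0_mem=8192M,max:8192M dom0_max_vcpus=16 dom0_vcpus_pin gnttab_max_frames=256 no-real-mode edd=off
"""

if __name__ == "__main__":
    info = parse_xl_info(SAMPLE)
    nr = int(info["nr_cpus"])
    topo = int(info["cores_per_socket"]) * int(info["threads_per_core"]) * SOCKETS
    print(nr, topo, nr == topo)  # 128 128 True
    opts = xen_cmdline_options(info["xen_commandline"])
    print(opts["dom0_max_vcpus"], "dom0_vcpus_pin" in opts)  # 16 True
```

This confirms that the 128 pCPUs reported by Xen are 64 cores x 2 threads. It also confirms that the hypervisor command line gives dom0 16 vCPUs, pinned with `dom0_vcpus_pin`.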